How AI is affecting and will affect Surgical Robotics (Exclusive Content)

- Steve Bell

- Feb 6, 2024

- 17 min read

Updated: Jul 30, 2025

So what do we really mean when we talk about AI (Artificial Intelligence) in surgical robotics?

Let's start by defining a bit better what AI is in robotics. AI is defined in a few ways but this one is quite nice:

"Artificial intelligence (AI) is the intelligence of machines or software, as opposed to the intelligence of humans or other animals. It is a field of study in computer science that develops and studies intelligent machines. Such machines may be called AIs"

When many people think of AI they think of super intelligence - when others think of AI they think that you turn on a machine - and it just learns from the world around it. That's not quite how it works today. One day the hope is that AI will be like a newborn baby - that just starts learning any general thing (General AI).

The reality is that today - and in surgical robotics - we are talking about a much narrower AI that learns from data sets - and then can help to "do things" - today those things are limited but already useful.

A simplistic way to think of this - you can imagine taking a pretty dumb product - applying this kind of software and giving it a level of "smarts" to make the product help the user in much better ways. Sometimes in ways that we "humans" didn't even think about. One of the interesting things about AI is that it often thinks like humans, but sometimes in surprising ways. It can also often scour data and see things that our human biases make us blind to. And this can lead to exciting insights. AlphaGo is a brilliant example, like when the world champion was shocked by a sudden winning move it made during a play-off with him.

It can also take vast quantities of raw data and make "sense" of what it is seeing - and that can lead again to being able to condense all that data down - apply a bit of intelligence to it - and produce a sensible result that a surgeon could act on. And that is the important bit. AI for AI's sake is not useful. But delivering the insights that change clinical practice for the better is.

Let me give an example:

Imagine that we could collect all the data from every operation there's ever been - collecting all the images ever taken - all the hand movements - all the instruments used. Then imagine we could link it to the patient's records and outcomes. We could look at the history of - let's say Lap Chole - and see all of the last 30 years of cases across the world - all in one place. Now imagine that we ask an intelligent system to scour all of that data and look for some kind of hints and patterns that tell us why some people got really bad results and other great results.

Now of course a human researcher could do something similar - suspecting that the bad results are to do with poor patient selection (let's say for the sake of this example). They go off and try to correlate the poor patient selection to poor outcomes - and now we already have a human bias in there. It would take them a pretty long time to review all the patient records - the videos - the notes of all those cases. And we may end up with a human biased correlation. But we may also miss a pattern in there that AI would have picked up.

(Attention: AI can be totally biased by training sets and how we set it up. So I'm being very fanciful here on purpose.)

But if done right - AI could be used to scour that data - and be asked to come up with a ranking of what correlates to bad outcomes. It goes off and surprisingly - it comes back and says...

Actually it looks like the worst outcomes happen if you have a lap chole on a late Friday afternoon session when everyone is tired and the surgeon then goes off for the weekend - leaving a junior to care for complications (just for a silly sake of argument).

That insight - that we humans may have missed - could lead to a change of practice so that no one does a Lap Chole on a Friday afternoon late session. And that information could be disseminated to all surgeons world-wide in a bulletin, leading to a rapid change of practice.
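To make the "ranking of what correlates to bad outcomes" idea a bit more concrete, here is a minimal sketch in Python. It is nothing like a real clinical analysis: the records, the field names (`session`, `patient_risk`, `complication`) and the `rank_factors` function are all made up for illustration, and the "ranking" is simply the gap between the worst and best complication rate within each factor.

```python
from collections import defaultdict

def rank_factors(cases, factors, outcome_key="complication"):
    """Rank factors by the gap between their worst and best complication rates."""
    ranking = []
    for factor in factors:
        totals = defaultdict(lambda: [0, 0])  # value -> [complications, cases]
        for case in cases:
            bucket = totals[case[factor]]
            bucket[0] += 1 if case[outcome_key] else 0
            bucket[1] += 1
        rates = {value: bad / n for value, (bad, n) in totals.items()}
        spread = max(rates.values()) - min(rates.values())
        worst_value = max(rates, key=rates.get)
        ranking.append((factor, round(spread, 3), worst_value))
    # Largest spread first: the factor that separates outcomes the most.
    return sorted(ranking, key=lambda row: row[1], reverse=True)

# Entirely made-up toy records, for illustration only.
cases = [
    {"session": "fri_pm", "patient_risk": "low", "complication": True},
    {"session": "fri_pm", "patient_risk": "high", "complication": True},
    {"session": "fri_pm", "patient_risk": "low", "complication": False},
    {"session": "tue_am", "patient_risk": "high", "complication": False},
    {"session": "tue_am", "patient_risk": "low", "complication": False},
    {"session": "tue_am", "patient_risk": "high", "complication": False},
]

for factor, spread, worst in rank_factors(cases, ["session", "patient_risk"]):
    print(factor, spread, worst)
```

In this toy data the session slot comes out on top and patient risk shows no gap at all. A real system would need to deal with confounding, sample sizes and the training set bias mentioned above before anyone changed practice on the back of it.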

Now with this example I can hear you saying - "But we can't go back and get all those videos, and patient records, and movements, and instruments." Because with lap surgery most of that data was just discarded. But robots have been collecting a lot of that information for the past years - and storing it in vast data lakes. Intuitive has a deep record of millions of cases... Most new robots are also collecting that data - systematically. So this is where we start to see applications of AI in surgical robotics that are real.

What is AI actually being used for today in surgical robotics?

It may seem surprising to those not closely following surgical robotics, but AI is already in use every day on these systems.

Today we have systems from companies like ASENSUS that are using sophisticated image recognition systems combined with AI - they call it "Performance guided surgery".

They use an AI system that they call augmented intelligence - which is using image processing and assisted software to help the surgeon make better decisions. It's a starting point where robots are helping the "Pilot" with early and basic "GPS" but this alone can start to help make better decisions and to avoid some mistakes.

Touch Surgery by Medtronic is another video based system that uses AI to start to segment surgical procedures, plus anonymise them to remove any patient identifiable information. It also starts to segment videos and look for areas of improvement by analysing that segmented data by user.

This could include timing of port insertion, or dissection of key structures. Through AI it can work out the critical information and then compare that to the rest of the world's data sets to see if a surgeon is above or below average for that data point. This can already be an early but useful insight.
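As a rough illustration of the "above or below average for that data point" comparison, the sketch below computes a simple percentile rank. The function name `percentile_rank` and the timing numbers are hypothetical, and this is not how Touch Surgery or any other product actually does it.

```python
def percentile_rank(value, reference):
    """Percentage of reference values that are strictly below `value`."""
    if not reference:
        raise ValueError("reference data set is empty")
    below = sum(1 for r in reference if r < value)
    return 100.0 * below / len(reference)

# Hypothetical port-insertion times in seconds from other surgeons.
global_times = [95, 110, 120, 130, 140, 150, 165, 180, 200, 240]
surgeon_time = 125

rank = percentile_rank(surgeon_time, global_times)
print(f"Slower than {rank:.0f}% of the reference group")
```

For a timing metric a low percentile is the good end of the scale. The same function works for any single number that can be pulled out of a segmented video.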

All of this image based analysis is aimed at giving surgeons "on the fly" and post operative augmented data to work out better surgical strategies, work out areas of improvement, and benchmark to best in class.

Moon Surgical - although early in their commercialisation - intends to use image based analytics and AI combined with some instrument and arm force measurements to start to give insights to surgeons through their Maestro system during manual laparoscopy.

Several other companies are already also using AI combined with their robot's telemetric data to start to give surgeons, hospitals and C-suite detailed analysis and insights into the use of their system. One of the leaders is Intuitive - with their my Intuitive app, which uses AI combined with rich system data to give in depth case analysis, and comparisons to other users. This allows initial insights into the usage of product, procedure metrics and other information. The AI is used to segment out the data and start to provide trend insights vs other global users. It allows surgeons to know where they stack up against the rest of the world - and importantly to monitor their own progress, especially through the initial learning curves.

One of the keys to AI in these data apps is that they start to give insights to users about usage and patterns within that usage that can be applied to practice. Often it is difficult for manual observers to spot these subtle trends - but with AI on specific data sets - including the hospital's own data sets, insights that are meaningful to the user in their own reality are being delivered.

One of the key things about these apps is their almost realtime analytics that allow much faster insights to be given back to the surgeon so they can adapt their practice - improve and get better outcomes. In the past the feedback loop of clinical trials - data collection - analysis and publication was almost glacial. Today people want fast actionable insights that adapt over time - and are relevant to their reality. But ... this is really just the beginning.

These are the obvious and in your face applications for AI today - but what is happening under the hood of the robots is where some of the fascinating applications of AI come in. Take preventive maintenance. Throughout every single case... the robot is throwing off masses of telemetric data: hand controller angles, joint angles, forces, temperature, power and a massive amount of sensor data. One of the applications of AI today is to look for patterns that lead to robot arm failures. This data can flag - thanks to AI - the need for an arm to be serviced way before a surgeon sees a degradation in performance. This can lead to servicing interventions that prevent robots being down for cases - or worse - during cases.
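A very stripped down version of that "flag it before the surgeon notices" idea is sketched below. It assumes one telemetry channel (a made-up per-case motor current for one joint) and uses a plain statistical threshold against an early baseline rather than any real vendor's model. The function name `flag_drift` and every number here are hypothetical.

```python
from statistics import mean, stdev

def flag_drift(readings, baseline_size=5, threshold=3.0):
    """Return indices of readings that sit more than `threshold` standard
    deviations away from the mean of the first `baseline_size` readings."""
    baseline = readings[:baseline_size]
    mu, sigma = mean(baseline), stdev(baseline)
    if sigma == 0:
        return []  # a flat baseline gives no scale to measure drift against
    return [
        i
        for i, x in enumerate(readings[baseline_size:], start=baseline_size)
        if abs(x - mu) / sigma > threshold
    ]

# Hypothetical per-case joint motor current (amps) for one arm.
motor_current = [1.00, 1.02, 0.98, 1.01, 0.99, 1.00, 1.03, 1.10, 1.18, 1.25]
print(flag_drift(motor_current))
```

The last three cases are flagged as the current creeps upward, which is the kind of slow pattern a human user would never see from the console. Real systems look across many channels and many robots at once, which is where the AI earns its keep.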

AI is also used to sift the data and feed trends and insights back to the engineers so they can bring those learnings into the design process for the next generation of arms. (A little more later on this)

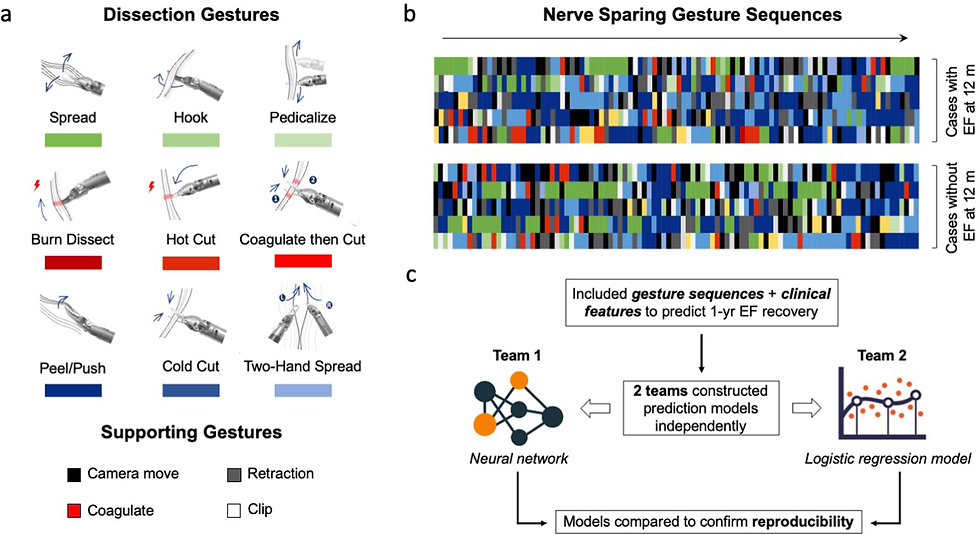

One other application that is happening today - is using AI to auto segment video to give a form of digital "fingerprinting" of steps, segments, actions during a procedure. This can lead to deep benchmarking insights that start to inform which segments of a procedure need to be performed best (and in which order) so that insights into "action and effect" can start to be correlated. The software learns from huge video data sets - often with human tagging of the cases as part of the learning process. But the AI can then start to learn to segment without human intervention and that is where it gets exciting.

This data when matched up to surgical outcomes can start to show trends that tell users important information about what steps lead to which outcomes. We start to be able to get to cause and effect and what would be a gold standard to drive best outcomes.
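The output side of the auto segmentation described above can be pictured quite simply. Assuming some model has already put a step label on every video frame (the hard part, not shown here), the sketch below collapses those labels into timed segments. The label names and the `frames_to_segments` function are invented for illustration.

```python
from itertools import groupby

def frames_to_segments(frame_labels, fps=1.0):
    """Collapse consecutive identical frame labels into (label, start_s, end_s)."""
    segments = []
    frame = 0
    for label, run in groupby(frame_labels):
        length = sum(1 for _ in run)
        segments.append((label, frame / fps, (frame + length) / fps))
        frame += length
    return segments

# Hypothetical per-frame labels at one frame per second.
labels = ["port_insertion"] * 3 + ["dissection"] * 4 + ["suturing"] * 2
for segment in frames_to_segments(labels):
    print(segment)
```

Once a procedure is reduced to a list like this, it becomes easy to compare step order and step duration across thousands of cases and line them up against outcomes.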

The next phase of AI in Robotics

So now I'm going to move away from what is happening day in and day out... and move to more speculation of what is just around the corner in surgical robotics with AI. So strap in.

Scene recognition will get better

The first and obvious area where AI will help is in better scene recognition within the live visual feeds coming off robots. As Nvidia dives deeper into supporting medtech companies with their imaging systems - we will quickly see improvements in advanced scene recognition. This will include:

Tissue boundary recognition: Where the AI will be able to understand what is a common bile duct, or a cystic duct, or connective tissue etc and be able to highlight in real time (like a GPS overlay) where different structures are present. It can colorise them and create boundaries - and even tag them for novice users.

Healthy tissue - diseased tissue boundaries: The AI combined with beyond human visual abilities - using ICG, hyperspectral imaging, high contrast recognition, super high definition - will be able to start to determine healthy tissue versus pathologic tissue - and give insights to the robotic user. This will be useful for margins of excision, or ensuring anastomoses are performed on viable tissue with adequate blood flow.

Surgical segmentation in more detail: The AI will not only be able to help with recognised segmentation (as above) - such as "You are dissecting" - but it will start to be able to determine if it is "good dissection" or "bad dissection". If it might lead to excessive bleeding. If the suture line is "good or bad." This will probably require initial human tagging of video segments and rankings and ratings - but as the AI learns it will start to be able to interpret these parts of the procedure itself and give a score back to the user. Potentially this can happen in real time so the AI can say "Erm doc... you might want to run that suture line again..."

Optimising instrument usage

Instrumentation choice: AI will be able to determine what instruments are in the surgical field - and be able to start to say if the right instrument (tool) is being used for the right thing. If it is being used effectively - or could be used better. It can also start to determine what tool - in which surgical segment and at which part of the segment. This can allow it to generate suggested "next tools" on "suggested robotic arm." This will help the surgeon and the OR team to improve efficiency.

Bespoke insights

With this better visual recognition - the AI will start to be able to analyse all of that visual data and automatically start to generate bespoke insights based upon the user they have operating on the robot. They will be able to determine levels of expertise of users by understanding the kinematic data of their hand movements combined with the visual scene. They will be able to give near human expert interpretation of the "level of skill and expertise." Combine it with other data sets like "How many robotic procedures the surgeon has done" - and then with the medical records to see their previous outcomes (in near real time, say through the learning curve as one use case). This will allow AI to then determine the level of assistance it needs to give in terms of prompts and what assistance the robot needs to give in terms of manoeuvres. In novices with poor results it may ramp up the "help" and give more step by step guidance. In very novice surgeons it may generate way more restrictive no fly zones and much stronger intervention asking "Are you sure you want to do that? 98% of your peers would not - and those that do get a 2% higher complication rate."

The subtlety of AI intervention and help will need to be tailored to the skill and experience of the user. A super expert user that is at the top of their game may get frustrated with alarm, alert and help suggestion overload. Whereas a junior surgeon may be very grateful for all the help and guidance they can get. But the AI will adapt levels of help and intervention with the surgeon as they gain more skills (also through suggested training modules in the simulator) and adjust the level of help as the user needs less. It will be able to interpret the "surgeon" and understand the level of proficiency, and adjust the help and support needed.
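To picture how that tailoring might be wired up, here is a deliberately simple sketch that maps a surgeon's case count and a 0 to 1 skill score onto a guidance tier. The tiers, the thresholds and the `assistance_level` function are all invented for illustration. A real system would presumably learn these from data rather than hard code them.

```python
def assistance_level(cases_done, skill_score):
    """Map experience and a 0-1 skill score to a guidance tier.

    All thresholds are invented for illustration.
    """
    if not 0.0 <= skill_score <= 1.0:
        raise ValueError("skill_score must be between 0 and 1")
    if cases_done < 20 or skill_score < 0.4:
        return "step_by_step"      # full prompts, strict no fly zones
    if cases_done < 200 or skill_score < 0.8:
        return "prompts_on_risk"   # only warn near risky manoeuvres
    return "minimal"               # expert: avoid alert overload

print(assistance_level(5, 0.7))     # early learning curve
print(assistance_level(120, 0.7))   # competent, still building volume
print(assistance_level(900, 0.95))  # top of their game
```

The point of the sketch is only that the same robot can behave very differently depending on who sits down at the console, and that the tier can move as the surgeon improves.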

At certain points the AI will be able to look across the "hive" of total users - and start to also be able to suggest the right proctors to be matched to the user and put in contact with them (on the fly) to resolve specific issues. AI will be able to tailor all levels of help and support, including specific human intervention as needed.

The nth degree of this - could ultimately be that AI can become the gatekeeper to the robot. This is one unique feature of robots compared to lap. They are the system between the surgeon and the patient. It is not beyond the realms of fantasy that AI will recognise the user, look at their historic data - see a massive downward trend in peri-operative skills and post operative outcomes. At some point it can alert the hospital - and if this "warning" is not corrected then it could - ultimately - not allow activation of the system for potentially "dangerous" surgeons. In the chart below the plots above the line could trigger the AI to say "hold on". Too far? Too much big brother?
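For the curious, the "downward trend, then alert, then hold" logic could be sketched as below. It assumes each case produces a single outcome score between 0 and 1 (a big assumption), fits a straight line through the recent scores and acts on the slope. The function names, the threshold and the scores are hypothetical.

```python
def trend_slope(values):
    """Least-squares slope of values against their index (0, 1, 2, ...)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two points")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    num = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(values))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den

def gatekeeper_action(outcome_scores, already_warned=False, decline_threshold=-0.02):
    """Alert the hospital on a clear decline; hold activation only if a
    previous warning has not been corrected. Threshold is illustrative."""
    if trend_slope(outcome_scores) >= decline_threshold:
        return "ok"
    return "hold_activation" if already_warned else "alert_hospital"

# Hypothetical per-case outcome scores for one surgeon, oldest first.
declining = [0.90, 0.88, 0.85, 0.80, 0.74]
print(gatekeeper_action(declining))
print(gatekeeper_action(declining, already_warned=True))
```

Note that the system only refuses to activate after a warning has already gone out and the trend has not recovered, which mirrors the escalation described above.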

Post surgical surgeon and team support

Next AI will get smarter in understanding the help surgeons and teams need post surgery. For surgeons that could be suggesting very specific remedial / supportive training via the simulators. For teams it could start to find areas where workflow could be improved - such as changing scheduling of procedures in the list, or running certain procedures only on certain days. There will be lots of insights that come out that we don't even understand yet. But it is one of the facets of AI that often "they" see things that we don't always see as humans. The black box of the neural network often throws up surprises and insights that can help us. I suspect that AI will also start to be able to pull out efficiency of the procedures and give procedural step sequences - to instrument usage suggestions - to other parts of the patient flow within the operating procedure.

Creation of tissue boundaries and intervention

Once surgical scene recognition gets to a certain level, and 3D depth perception is achieved by AI, the AI will help to set up structure boundaries in topographical maps in the body. It could be assisted by CT or MRI pre operative scans to get a general lay of the land. But it may require real time image processing (maybe supported by advanced image systems like structured light for 3D or laser speckle systems for blood flow) - but sooner or later AI will recognise 3D structures with depth, and perfusion and build geo fences or no fly zones around them.

These 3D no fly zones will serve multiple purposes - but ultimately it will be to improve safety. Imagine that over major blood vessels the AI creates zones where any sharp instrument cannot enter the zone. Or electrosurgery cannot be activated. It may require the user to hit an override function to continue - as they may be a vascular surgeon who wants to open a vessel. Or AI could protect the common bile duct in cholecystectomy in real time. This is way more useful in robotics as the robot can intervene and disconnect the surgeon from the arms and instruments - stopping the procedure. That allows a strong "pause for thought" and a positive reengagement required by a surgeon. That is very different than a flash up warning in lap surgery that can easily be ignored. It is that combination of AI safety and robot intervention that could make a huge step forwards in patient safety.
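A bare bones picture of a no fly zone check is sketched below. It assumes the AI has already located a structure in 3D and wrapped a simple sphere around it, which is far cruder than any real anatomical boundary. The `NoFlyZone` class, the `allow_motion` function and the coordinates are all hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class NoFlyZone:
    name: str
    centre: tuple      # (x, y, z) in millimetres, in the camera frame
    radius_mm: float

def allow_motion(tip, zones, instrument_is_sharp, override=False):
    """Return (allowed, zone_name). A sharp tip may not enter a zone
    unless the surgeon has explicitly hit the override."""
    for zone in zones:
        inside = math.dist(tip, zone.centre) <= zone.radius_mm
        if inside and instrument_is_sharp and not override:
            return False, zone.name
    return True, None

# Hypothetical zone around the common bile duct.
zones = [NoFlyZone("common_bile_duct", (10.0, 0.0, 50.0), 5.0)]

print(allow_motion((12.0, 1.0, 51.0), zones, instrument_is_sharp=True))
print(allow_motion((12.0, 1.0, 51.0), zones, instrument_is_sharp=True, override=True))
print(allow_motion((30.0, 0.0, 50.0), zones, instrument_is_sharp=True))
```

The override flag is the "positive reengagement" step: the robot stops, the surgeon has to consciously say "yes, I mean it", and only then does the motion go through.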

In another application, the AI could help to identify healthy and diseased tissue and could help to "nudge" the instruments (scissors let's say) to the exact boundary between the tissues to get the best possible tissue margins for tumours, but leaving as much viable tissue as possible. Precision surgery at its maximum.

Road to autonomous surgery

Talking about "nudging" instruments - that can be thought of like cars today, when you steer and the lane assist kicks in to keep you in the right lane. This slow march to autonomous cars is an analogue of what I believe will happen (is happening) in robotic surgery.

AI will be used in several ways - such as nudging the scissors for better margins - to automated suturing to get perfect suture placement - to automated knot tying - but based upon the real time situation. Not just the same knot or suture line being repeated from a set of standard instructions - but each one tailored to the actual tissue - the surgical scene - procedure by procedure based upon global best practices. I may not be explaining this well. But it will be AI intervention with bespoke autonomous surgical manoeuvres that will bring precision bespoke surgery to the patients.

We will see AI start to take more and more control of key procedure steps as it improves and we move towards autonomous surgery. AI will be the trigger and enabler for autonomous surgery when it can string all of the above together - identifying structures - knowing the best sequence of procedure steps with the right instruments used in the most efficient way then delivering the best bespoke surgical manoeuvres. We will start to see smart autonomous procedures that will reduce errors and improve the speed of procedures. Perhaps the AI even reaching out to other AI systems or users for assistance when it gets into highly difficult situations. AI phone a friend.

I imagine it will then enter into a smart self learning loop as it uses AI to look for improvements in post operative data and feeds that all back into patient selection - pre-operative 3D planning - best procedure scheduling - best peri-operative procedure practices - best post operative course to follow. With the smart robot at the very heart of this feedback loop.

Maintenance, supply and design iterations

I'll expand a little more on what's already happening today with preventative maintenance diagnostics. None of the above will improve efficiency if the system is down, or instruments are not available for the case. So AI will also be applied even more heavily to the back end of the robots (some of this has been happening for years at Intuitive), but more robots will have this kind of diagnostic smarts. Huge amounts of telemetric data are flowing back from the systems every single day. Within that stream of data are signs and indications of issues with the system. Not visible to the human user - but patterns that show a potential failure some time in the near future. By having AI look across the fleet in real time, the systems can start to predict maintenance more efficiently - and move from regular service intervals to bespoke servicing based on high usage of the system and stress of usage due to "heavy procedures". Not all robots are used with the same intensity. Medicaroid is one company that is already looking at this and utilising it.

That type of approach can also move into monitoring the instruments and instrument usage, as AI can predict upcoming patterns of usage and ensure higher or lower instrument supply. But not only that, we could see a move away from fixed "chipped lives" to a method where AI looks at all the aspects of the instrument - visually and telemetric data to see when that particular instrument should really be retired based on its actual real world performance. Every surgery will stress each instrument in different ways, and AI may well be able to start to work that out over the life usage of an instrument.
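To show the difference between a fixed "chipped life" and a usage based one, here is a toy sketch. The wear formula (one unit per hour of use, scaled by mean force relative to 5 N), the wear budget and the `remaining_life` function are all made up. Real instrument wear is far more complicated than this.

```python
def remaining_life(uses, wear_budget=10.0):
    """Fraction of life left after a list of uses.

    Each use is (duration_min, mean_force_n). Wear per use follows an
    invented formula: one unit per hour, scaled by force relative to 5 N.
    """
    wear = sum((minutes / 60.0) * (force / 5.0) for minutes, force in uses)
    return max(0.0, 1.0 - wear / wear_budget)

# Two hypothetical instruments, each used five times.
gentle = [(60, 5.0)] * 5     # short, light cases
heavy = [(120, 10.0)] * 5    # long, high-force cases

print(remaining_life(gentle))
print(remaining_life(heavy))
```

Under a fixed count both instruments would be treated the same after five uses. With a usage based view the gently used one still has half its life left, while the heavily used one should already have been retired.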

Finally all that data coming back about patients, systems, instruments and users will be fed back into intelligent AI self design systems - and the robotic systems will design themselves for improvements (that human engineers can implement). The AI will predict different joint lengths in the arms to minimise clash; different motor settings based on what users really need to perform certain procedures. Instrument design changes based on the real world challenges the AI sees, and outcomes of patients. AI will design new (never before thought of) end effectors, and suggested component changes to maximise life and improve usability.

It will all combine to become an AI virtuous circle that will bring continuous improvements to systems and users.

Summary

The latter part of this blog may seem a fanciful trip into the "not in my lifetime." But again, ChatGPT etc. was that for me just two years ago. I think the genie is out of the bottle with AI. And if there is one area of technology that would absolutely benefit from AI... it's surgical robotics. Add to that the fact that every major company is pumping billions into R&D looking for an edge to beat Intuitive... And well, software is fast and relatively cheap to iteratively develop. It is a fast and effective way to bring cutting edge functionality to robotic systems. So I would not be surprised if surgical robotics sees a huge leap in AI applications in the next 5 to 10 years. Some of this can easily be implemented as it is not even embedded software in the robot - but off system; so has an easier regulatory pathway and faster spin cycle.

I am sure I have missed a lot of other areas AI could be applied to surgical robotics so I look forward to the comments.

AI is already in use today in surgical robotics - data is already flowing today as video and telemetric data. It's not too far fetched to see all these pieces coming together very soon. Remember, a lot is already in late stages in labs around the world... today. It won't take much to see this research being pushed towards commercial products soon.
